Clustered systems provide reliability, scalability, and availability to critical production services. Red Hat Cluster Suite can be used in many configurations to provide high availability, scalability, load balancing, file sharing, and high performance.

A cluster is two or more computers (called nodes or members) that work together to perform a task. There are four major types of clusters:

- Storage

- High availability

- Load balancing

- High performance

Storage clusters provide a consistent file system image across servers in a cluster, allowing the servers to simultaneously read and write to a single shared file system. A storage cluster simplifies storage administration by limiting the installation and patching of applications to one file system. Also, with a cluster-wide file system, a storage cluster eliminates the need for redundant copies of application data and simplifies backup and disaster recovery. Red Hat Cluster Suite provides storage clustering through Red Hat GFS.

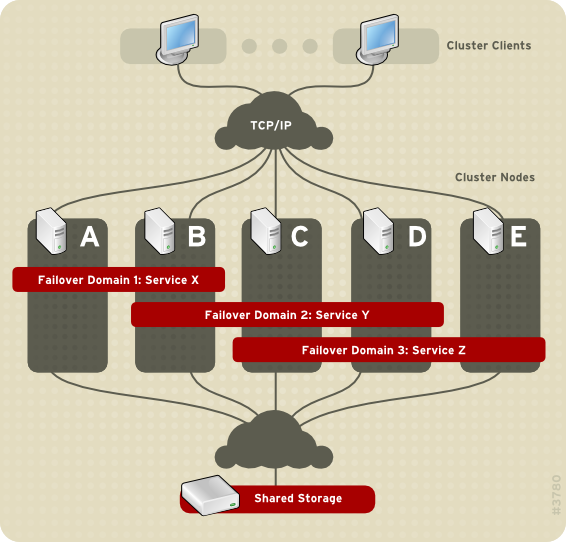

High-availability clusters provide continuous availability of services by eliminating single points of failure and by failing over services from one cluster node to another in case a node becomes inoperative. Typically, services in a high-availability cluster read and write data (via read-write mounted file systems). Therefore, a high-availability cluster must maintain data integrity as one cluster node takes over control of a service from another cluster node. Node failures in a high-availability cluster are not visible from clients outside the cluster. (High-availability clusters are sometimes referred to as failover clusters.) Red Hat Cluster Suite provides high-availability clustering through its High-availability Service Management component.

Load-balancing clusters dispatch network service requests to multiple cluster nodes to balance the request load among the cluster nodes. Load balancing provides cost-effective scalability because you can match the number of nodes according to load requirements. If a node in a load-balancing cluster becomes inoperative, the load-balancing software detects the failure and redirects requests to other cluster nodes. Node failures in a load-balancing cluster are not visible from clients outside the cluster. Red Hat Cluster Suite provides load-balancing through LVS (Linux Virtual Server).

High-performance clusters use cluster nodes to perform concurrent calculations. A high-performance cluster allows applications to work in parallel, thereby enhancing the performance of the applications. (High-performance clusters are also referred to as computational clusters or grid computing.)

Red Hat Cluster Suite (RHCS) is an integrated set of software components that can be deployed in a variety of configurations to suit your needs for performance, high-availability, load balancing, scalability, file sharing, and economy.

- Cluster infrastructure

Configuration-file management, membership management, lock management, and fencing.

- High-availability service management

Service monitoring and failover.

- Red Hat GFS

Shared storage through a cluster file system.

- Cluster Logical Volume Manager (CLVM)

Volume management of cluster storage.

- Global Network Block Device (GNBD)

An ancillary component of GFS that exports block-level storage to Ethernet. This is an economical way to make block-level storage available to Red Hat GFS.

- Cluster administration tools

Configuration and management tools for setting up, configuring, and managing a Red Hat cluster.

- Linux Virtual Server (LVS)

IP load-balancing component.

Cluster infrastructure

Cluster Management

Cluster management is the main component of RHCS. It manages cluster quorum and cluster membership through one of the following components:

- Cluster Manager ( CMAN )

The cluster manager keeps track of cluster quorum by monitoring the count of cluster nodes that run cluster manager. (In a CMAN cluster, all cluster nodes run cluster manager; in a GULM cluster only the GULM servers run cluster manager.) If more than half the nodes that run cluster manager are active, the cluster has quorum. If half the nodes that run cluster manager (or fewer) are active, the cluster does not have quorum, and all cluster activity is stopped. Cluster quorum prevents the occurrence of a "split-brain" condition — a condition where two instances of the same cluster are running. A split-brain condition would allow each cluster instance to access cluster resources without knowledge of the other cluster instance, resulting in corrupted cluster integrity.

In a CMAN cluster, quorum is determined by communication of heartbeats among cluster nodes via Ethernet. Optionally, quorum can be determined by a combination of communicating heartbeats via Ethernet and through a quorum disk. For quorum via Ethernet, quorum consists of 50 percent of the node votes plus 1. For quorum via quorum disk, quorum consists of user-specified conditions.

In a CMAN cluster, by default each node has one quorum vote for establishing quorum. Optionally, you can configure each node to have more than one vote.

- Grand Unified Lock Manager (GULM)

In a GULM cluster, the quorum consists of a majority of nodes designated as GULM servers according to the number of GULM servers configured:

- Configured with one GULM server: quorum equals one GULM server.

- Configured with three GULM servers: quorum equals two GULM servers.

- Configured with five GULM servers: quorum equals three GULM servers.

The cluster manager keeps track of membership by monitoring heartbeat messages from other cluster nodes. When cluster membership changes, the cluster manager notifies the other infrastructure components, which then take appropriate action.
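The quorum rules above (more than half of the votes in a CMAN cluster, a majority of the configured GULM servers in a GULM cluster) come down to the same arithmetic. The following shell sketch only illustrates that arithmetic; the function names `quorum_threshold` and `has_quorum` are made up for this example and are not part of Red Hat Cluster Suite.

```shell
#!/bin/sh
# Illustrative only: these helpers are not part of Red Hat Cluster Suite.

# Print the number of votes needed for quorum:
# half of the total votes (rounded down) plus one.
quorum_threshold() {
    total_votes=$1
    echo $(( total_votes / 2 + 1 ))
}

# Succeed (exit status 0) if the active votes reach the quorum threshold,
# fail (exit status 1) otherwise.
has_quorum() {
    total_votes=$1
    active_votes=$2
    [ "$active_votes" -ge "$(quorum_threshold "$total_votes")" ]
}
```

For example, `quorum_threshold 5` prints 3, which matches the five-server GULM case, and `has_quorum 4 2` fails because exactly half of the votes is not enough.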

Lock Management

Lock management is a common cluster-infrastructure service that provides a mechanism for other cluster infrastructure components to synchronize their access to shared resources. In a Red Hat cluster, one of the following Red Hat Cluster Suite components operates as the lock manager: DLM (Distributed Lock Manager) or GULM (Grand Unified Lock Manager). The major difference between the two lock managers is that DLM is a distributed lock manager and GULM is a client-server lock manager. DLM runs in each cluster node; lock management is distributed across all nodes in the cluster.

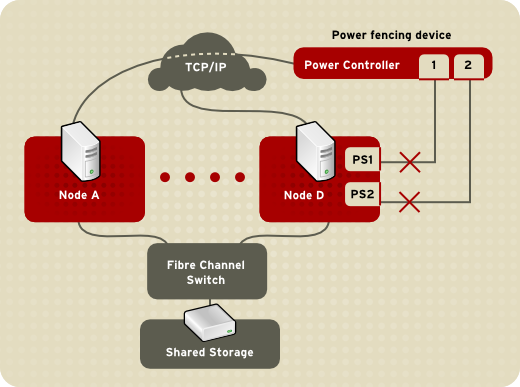

Fencing

Fencing is the disconnection of a node from the cluster's shared storage. Fencing cuts off I/O from shared storage, thus ensuring data integrity.

The cluster infrastructure performs fencing through one of the following programs according to the type of cluster manager and lock manager that is configured:

- Configured with CMAN/DLM: fenced, the fence daemon, performs fencing.

- Configured with GULM servers: GULM performs fencing.

When the cluster manager determines that a node has failed, it communicates to other cluster-infrastructure components that the node has failed. The fencing program (either fenced or GULM), when notified of the failure, fences the failed node. Other cluster-infrastructure components determine what actions to take; that is, they perform any recovery that needs to be done. For example, DLM and GFS (in a cluster configured with CMAN/DLM), when notified of a node failure, suspend activity until they detect that the fencing program has completed fencing the failed node. Upon confirmation that the failed node is fenced, DLM and GFS perform recovery. DLM releases locks of the failed node; GFS recovers the journal of the failed node.

The fencing program determines from the cluster configuration file which fencing method to use. Two key elements in the cluster configuration file define a fencing method: fencing agent and fencing device. The fencing program makes a call to a fencing agent specified in the cluster configuration file. The fencing agent, in turn, fences the node via a fencing device. When fencing is complete, the fencing program notifies the cluster manager.

Red Hat Cluster Suite provides a variety of fencing methods:

- Power fencing: a fencing method that uses a power controller to power off an inoperable node.

- Fibre Channel switch fencing: a fencing method that disables the Fibre Channel port that connects storage to an inoperable node.

- GNBD fencing: a fencing method that disables an inoperable node's access to a GNBD server.

- Other fencing: several other fencing methods that disable I/O or power of an inoperable node, including IBM BladeCenter, PAP, DRAC/MC, HP iLO, IPMI, IBM RSA II, and others.

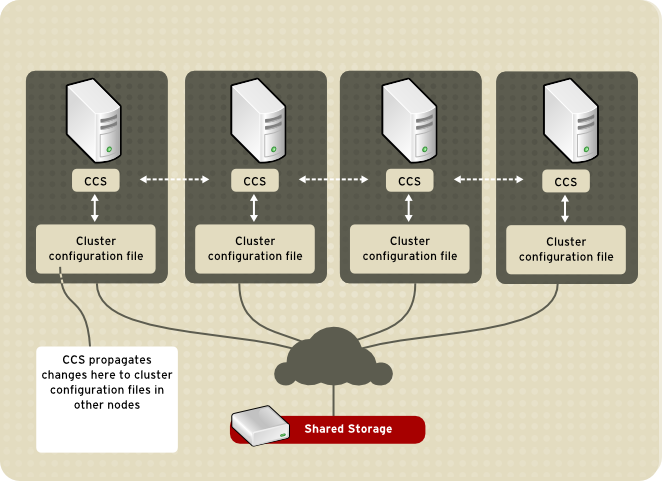

Cluster configuration system

The Cluster Configuration System (CCS) manages the cluster configuration and provides configuration information to other cluster components in a Red Hat cluster. CCS runs in each cluster node and makes sure that the cluster configuration file in each cluster node is up to date. For example, if a cluster system administrator updates the configuration file in Node A, CCS propagates the update from Node A to the other nodes in the cluster.

The cluster configuration file (/etc/cluster/cluster.conf) is an XML file that describes the following cluster characteristics:

- Cluster name: displays the cluster name, cluster configuration file revision level, locking type (either DLM or GULM), and basic fence timing properties used when a node joins a cluster or is fenced from the cluster.

- Cluster: displays each node of the cluster, specifying node name, number of quorum votes, and fencing method for that node.

- Fence Device: displays fence devices in the cluster. Parameters vary according to the type of fence device. For example, for a power controller used as a fence device, the cluster configuration defines the name of the power controller, its IP address, login, and password.

- Managed Resources: displays resources required to create cluster services. Managed resources include the definition of failover domains, resources (for example, an IP address), and services. Together the managed resources define cluster services and the failover behavior of those services.
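To make these four parts more concrete, the shell sketch below writes a small sample configuration to a scratch file (not to /etc/cluster/cluster.conf). Every name, address, and credential in it is a placeholder, and the element layout is an assumption based on the usual CMAN/DLM cluster.conf structure, so it should be checked against the documentation shipped with your release before being used for anything real.

```shell
#!/bin/sh
# Write a sample cluster.conf to a scratch file; all values are placeholders.
SAMPLE_CONF="${TMPDIR:-/tmp}/cluster.conf.sample.$$"

cat > "$SAMPLE_CONF" <<'EOF'
<?xml version="1.0"?>
<!-- Cluster name and configuration file revision level -->
<cluster name="demo_cluster" config_version="1">
  <!-- Basic fence timing properties -->
  <fence_daemon post_join_delay="3" post_fail_delay="0"/>
  <!-- CMAN with DLM locking; two_node is the special case for two-node clusters -->
  <cman two_node="1" expected_votes="1"/>

  <!-- Cluster nodes: name, quorum votes, fencing method -->
  <clusternodes>
    <clusternode name="node1.example.com" votes="1">
      <fence>
        <method name="1">
          <device name="apc1" port="1"/>
        </method>
      </fence>
    </clusternode>
    <clusternode name="node2.example.com" votes="1">
      <fence>
        <method name="1">
          <device name="apc1" port="2"/>
        </method>
      </fence>
    </clusternode>
  </clusternodes>

  <!-- Fence devices: here a power controller with IP address, login, password -->
  <fencedevices>
    <fencedevice name="apc1" agent="fence_apc" ipaddr="192.0.2.10" login="admin" passwd="secret"/>
  </fencedevices>

  <!-- Managed resources: failover domains, resources, services -->
  <rm>
    <failoverdomains>
      <failoverdomain name="web_domain" ordered="0" restricted="0">
        <failoverdomainnode name="node1.example.com" priority="1"/>
        <failoverdomainnode name="node2.example.com" priority="1"/>
      </failoverdomain>
    </failoverdomains>
    <resources>
      <ip address="192.0.2.100" monitor_link="1"/>
    </resources>
    <service name="web" domain="web_domain" autostart="1">
      <ip ref="192.0.2.100"/>
      <script name="httpd" file="/etc/init.d/httpd"/>
    </service>
  </rm>
</cluster>
EOF

echo "Sample written to $SAMPLE_CONF"
```

The comments in the sample map each element back to the characteristics listed above.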

Cluster Administration

There are three alternatives for administering the cluster:

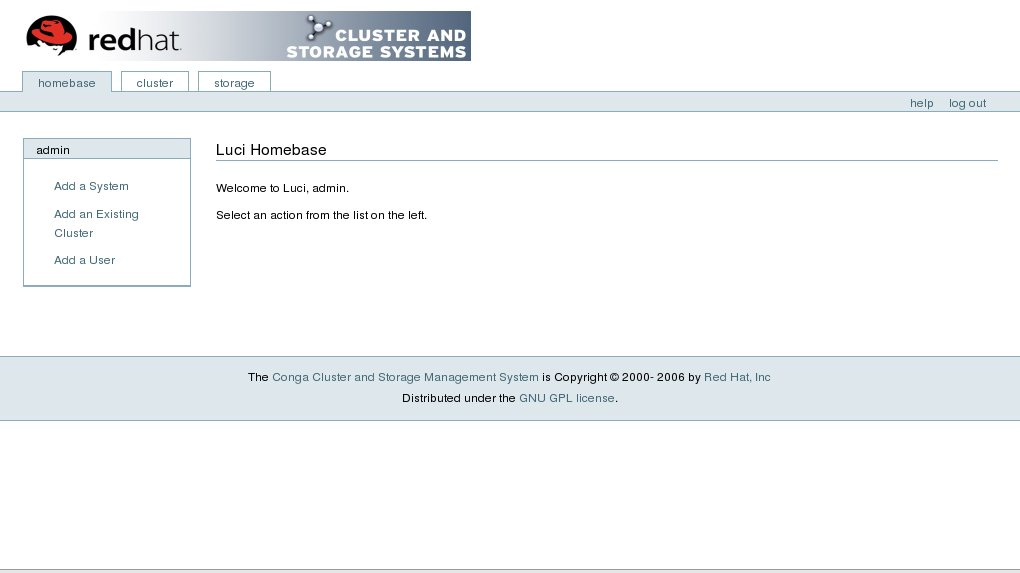

- Conga

Conga uses one daemon (luci), which runs on one node and serves the web administration interface. luci communicates with the other nodes through the ricci daemon.

- Cluster administration GUI

This section provides an overview of the cluster administration graphical user interface (GUI) available with Red Hat Cluster Suite — system-config-cluster. The GUI is for use with the cluster infrastructure and the high-availability service management components.

The GUI consists of two major functions: the Cluster Configuration Tool and the Cluster Status Tool. The Cluster Configuration Tool provides the capability to create, edit, and propagate the cluster configuration file (/etc/cluster/cluster.conf). The Cluster Status Tool provides the capability to manage high-availability services.

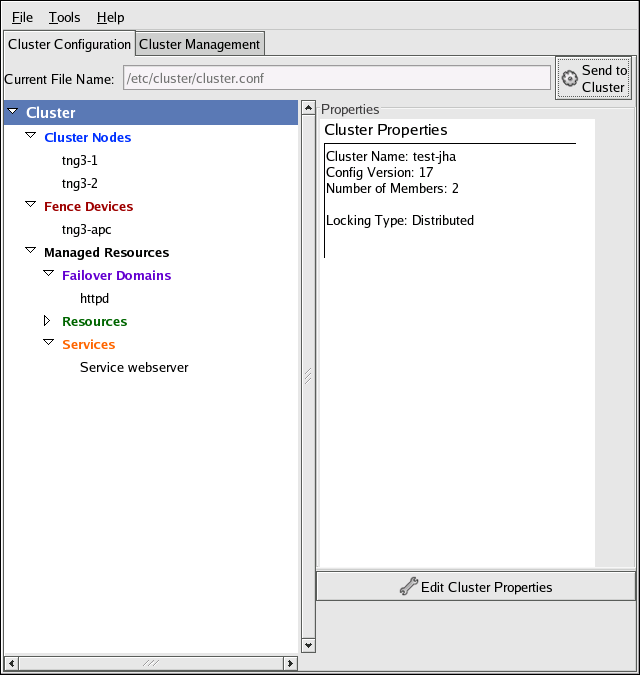

You can access the Cluster Configuration Tool through the Cluster Configuration tab in the Cluster Administration GUI.

The Cluster Configuration Tool represents cluster configuration components in the configuration file (/etc/cluster/cluster.conf) with a hierarchical graphical display in the left panel. A triangle icon to the left of a component name indicates that the component has one or more subordinate components assigned to it. Clicking the triangle icon expands and collapses the portion of the tree below a component. The components displayed in the GUI are summarized as follows:

- Cluster Nodes: displays cluster nodes. Nodes are represented by name as subordinate elements under Cluster Nodes. Using configuration buttons at the bottom of the right frame (below Properties), you can add nodes, delete nodes, edit node properties, and configure fencing methods for each node.

- Fence Devices: displays fence devices. Fence devices are represented as subordinate elements under Fence Devices. Using configuration buttons at the bottom of the right frame (below Properties), you can add fence devices, delete fence devices, and edit fence-device properties. Fence devices must be defined before you can configure fencing (with the Manage Fencing For This Node button) for each node.

- Managed Resources: displays failover domains, resources, and services.

  - Failover Domains: for configuring one or more subsets of cluster nodes used to run a high-availability service in the event of a node failure. Failover domains are represented as subordinate elements under Failover Domains. Using configuration buttons at the bottom of the right frame (below Properties), you can create failover domains (when Failover Domains is selected) or edit failover domain properties (when a failover domain is selected).

  - Resources: for configuring shared resources to be used by high-availability services. Shared resources consist of file systems, IP addresses, NFS mounts and exports, and user-created scripts that are available to any high-availability service in the cluster. Resources are represented as subordinate elements under Resources. Using configuration buttons at the bottom of the right frame (below Properties), you can create resources (when Resources is selected) or edit resource properties (when a resource is selected).

  - Services: for creating and configuring high-availability services. A service is configured by assigning resources (shared or private), assigning a failover domain, and defining a recovery policy for the service. Services are represented as subordinate elements under Services. Using configuration buttons at the bottom of the right frame (below Properties), you can create services (when Services is selected) or edit service properties (when a service is selected).

The Cluster Configuration Tool also provides the capability to configure private resources. A private resource is a resource that is configured for use with only one service. You can configure a private resource within a Service component in the GUI.

- Cluster Status Tool

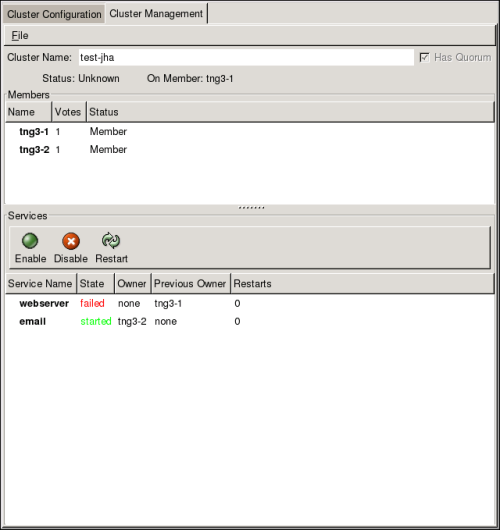

You can access the Cluster Status Tool through the Cluster Management tab in Cluster Administration GUI.

The nodes and services displayed in the Cluster Status Tool are determined by the cluster configuration file (/etc/cluster/cluster.conf). You can use the Cluster Status Tool to enable, disable, restart, or relocate a high-availability service. The Cluster Status Tool displays the current cluster status in the Services area and automatically updates the status every 10 seconds.

To enable a service, you can select the service in the Services area and click Enable. To disable a service, you can select the service in the Services area and click Disable. To restart a service, you can select the service in the Services area and click Restart. To relocate a service from one node to another, you can drag the service to another node and drop the service onto that node. Relocating a service restarts the service on the destination node. (Relocating a service to its current node, that is, dragging a service to its current node and dropping it onto that node, restarts the service.)

The following tables describe the members and services status information displayed by the Cluster Status Tool.

| Members Status | Description |
|---|---|
| Member | The node is part of the cluster. |
| Dead | The node is unable to participate as a cluster member. The most basic cluster software is not running on the node. |

Table Members Status

| Services Status | Description |
|---|---|
| Started | The service resources are configured and available on the cluster system that owns the service. |
| Pending | The service has failed on a member and is pending start on another member. |
| Disabled | The service has been disabled, and does not have an assigned owner. A disabled service is never restarted automatically by the cluster. |
| Stopped | The service is not running; it is waiting for a member capable of starting the service. A service remains in the stopped state if autostart is disabled. |
| Failed | The service has failed to start on the cluster, and the cluster cannot successfully stop it. A failed service is never restarted automatically by the cluster. |

- Command line tools

In addition to the Cluster Administration GUI, command line tools are available for administering the cluster infrastructure and the high-availability service management components. The command line tools are used by the Cluster Administration GUI and init scripts supplied by Red Hat. The following table summarizes the command line tools.

| Command Line Tool | Used With | Purpose |
|---|---|---|
| ccs_tool (Cluster Configuration System Tool) | Cluster Infrastructure | ccs_tool is a program for making online updates to the cluster configuration file. It provides the capability to create and modify cluster infrastructure components (for example, creating a cluster, adding and removing a node). For more information about this tool, refer to the ccs_tool(8) man page. |
| cman_tool (Cluster Management Tool) | Cluster Infrastructure | cman_tool is a program that manages the CMAN cluster manager. It provides the capability to join a cluster, leave a cluster, kill a node, or change the expected quorum votes of a node in a cluster. For more information about this tool, refer to the cman_tool(8) man page. |
| fence_tool (Fence Tool) | Cluster Infrastructure | fence_tool is a program used to join or leave the default fence domain. Specifically, it starts the fence daemon (fenced) to join the domain and kills fenced to leave the domain. For more information about this tool, refer to the fence_tool(8) man page. |
| clustat (Cluster Status Utility) | High-availability Service Management Components | The clustat command displays the status of the cluster. It shows membership information, quorum view, and the state of all configured user services. For more information about this tool, refer to the clustat(8) man page. |
| clusvcadm (Cluster User Service Administration Utility) | High-availability Service Management Components | The clusvcadm command allows you to enable, disable, relocate, and restart high-availability services in a cluster. For more information about this tool, refer to the clusvcadm(8) man page. |

Cluster Services

Monitoring and failover of cluster services can be done using simple scripts or binaries. The cluster calls such a script with one of three arguments: start, stop, or status. When the script is called with the status argument, it should exit with status 0 for OK and status 1 for not OK.

So, if we want to run Apache as a clustered service, we can use its default init script (under /etc/init.d).
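As a minimal sketch of the start/stop/status contract described above, the following shell function stands in for a service script. The service is deliberately fake: "running" only means that a flag file exists. The names `demo_service` and `STATE_FILE` are hypothetical and chosen for this example only.

```shell
#!/bin/sh
# Hypothetical stand-in service: "running" simply means the flag file exists.
STATE_FILE="${TMPDIR:-/tmp}/demo_service.running"

demo_service() {
    case "$1" in
        start)
            # Mark the service as running.
            touch "$STATE_FILE"
            ;;
        stop)
            # Mark the service as stopped; succeeds even if it was not running.
            rm -f "$STATE_FILE"
            ;;
        status)
            # Exit status 0 means OK, exit status 1 means not OK.
            [ -f "$STATE_FILE" ] && return 0
            return 1
            ;;
        *)
            echo "Usage: demo_service {start|stop|status}" >&2
            return 2
            ;;
    esac
}
```

A real script would end by calling the function with the script's first argument and exiting with its return value, so that the cluster sees the 0 or 1 status directly.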

Installation

In order to install all required packages on a Red Hat Enterprise Linux (RHEL) 4 system, we can use the following command as root:

up2date --force --installall=rhel-i386-as-4-cluster

Operations

In order to relocate a service to another node, we can use the cluster administration GUI, invoked with the command

system-config-cluster

or we can issue the command

clusvcadm -r <service name> -m <destination node>

In order to see cluster status:

clustat -l

We can examine all cluster logs through /var/log/messages.
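For day-to-day operations it can help to wrap the service commands in a small helper. The sketch below only prints the clusvcadm command line for a given action, so it can be reviewed before being run on a cluster node. The function name `svc_cmd` is hypothetical, and while -r and -m are the options shown above, the -e, -d, and -R options are assumptions here that should be checked against the clusvcadm(8) man page on your release.

```shell
#!/bin/sh
# Hypothetical helper: prints (does not run) the clusvcadm command for an action.
svc_cmd() {
    action=$1
    service=$2
    node=${3:-}
    case "$action" in
        enable)   echo "clusvcadm -e $service" ;;
        disable)  echo "clusvcadm -d $service" ;;
        restart)  echo "clusvcadm -R $service" ;;
        relocate)
            # Relocation needs a destination node.
            if [ -z "$node" ]; then
                echo "relocate needs a destination node" >&2
                return 1
            fi
            echo "clusvcadm -r $service -m $node"
            ;;
        *)
            echo "unknown action: $action" >&2
            return 1
            ;;
    esac
}
```

For example, `svc_cmd relocate web node2` prints `clusvcadm -r web -m node2`, where `web` and `node2` are placeholder names.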

Manual Start / Stop Cluster Services

In order to start cluster services:

service ccsd start

service qdiskd start

service cman start

service fenced start

service rgmanager start

In order to stop cluster services:

service qdiskd stop

service rgmanager stop

service fenced stop

service cman stop

service ccsd stop

Updating cluster.conf

To update the config file in a running cluster:

- Have all nodes running as cluster members using the original cluster.conf.

- On one node, update /etc/cluster/cluster.conf, incrementing config_version.

- On the same node run

ccs_tool update /etc/cluster/cluster.conf

This instructs the system to propagate the new cluster.conf to all nodes in the cluster.

- Verify that the new cluster.conf exists on all nodes.

- On the same node run

cman_tool version -r <new config_version>

This informs the cluster manager of the version number change.

- Check

cman_tool status

to verify the new config version.

You can also change the configuration on one node from the cluster GUI and then click the "Send To Cluster" button to propagate the changes to the other nodes.
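The manual update steps above can be sketched as a few shell helpers. This is only an illustration under stated assumptions: the function names are hypothetical, the version bump is a plain text substitution that assumes config_version appears once in the file, and the cluster commands are printed rather than executed so that they can be reviewed first.

```shell
#!/bin/sh
# Hypothetical helpers for the update procedure; not part of RHCS.

# Print the current config_version found in the given file.
current_config_version() {
    sed -n 's/.*config_version="\([0-9][0-9]*\)".*/\1/p' "$1" | head -n 1
}

# Rewrite the file with config_version incremented by one
# and print the new value.
bump_config_version() {
    conf=$1
    old=$(current_config_version "$conf")
    if [ -z "$old" ]; then
        echo "no config_version found in $conf" >&2
        return 1
    fi
    new=$(( old + 1 ))
    sed "s/config_version=\"$old\"/config_version=\"$new\"/" "$conf" > "$conf.tmp" &&
        mv "$conf.tmp" "$conf" &&
        echo "$new"
}

# Print (rather than run) the propagation and verification commands.
print_update_commands() {
    conf=$1
    new=$2
    echo "ccs_tool update $conf"
    echo "cman_tool version -r $new"
    echo "cman_tool status"
}
```

A typical use would be to run `bump_config_version` on a copy of cluster.conf after editing it, and then pass the printed version number to `print_update_commands` to get the commands to run on that node.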

References

https://www.redhat.com/docs/manuals/csgfs/browse/rh-cms-desc-ov-en/index.html

http://sources.redhat.com/cluster/faq.html

Special thanks to Christos Psonis (Χρήστος Ψώνης) for contributing to this article.